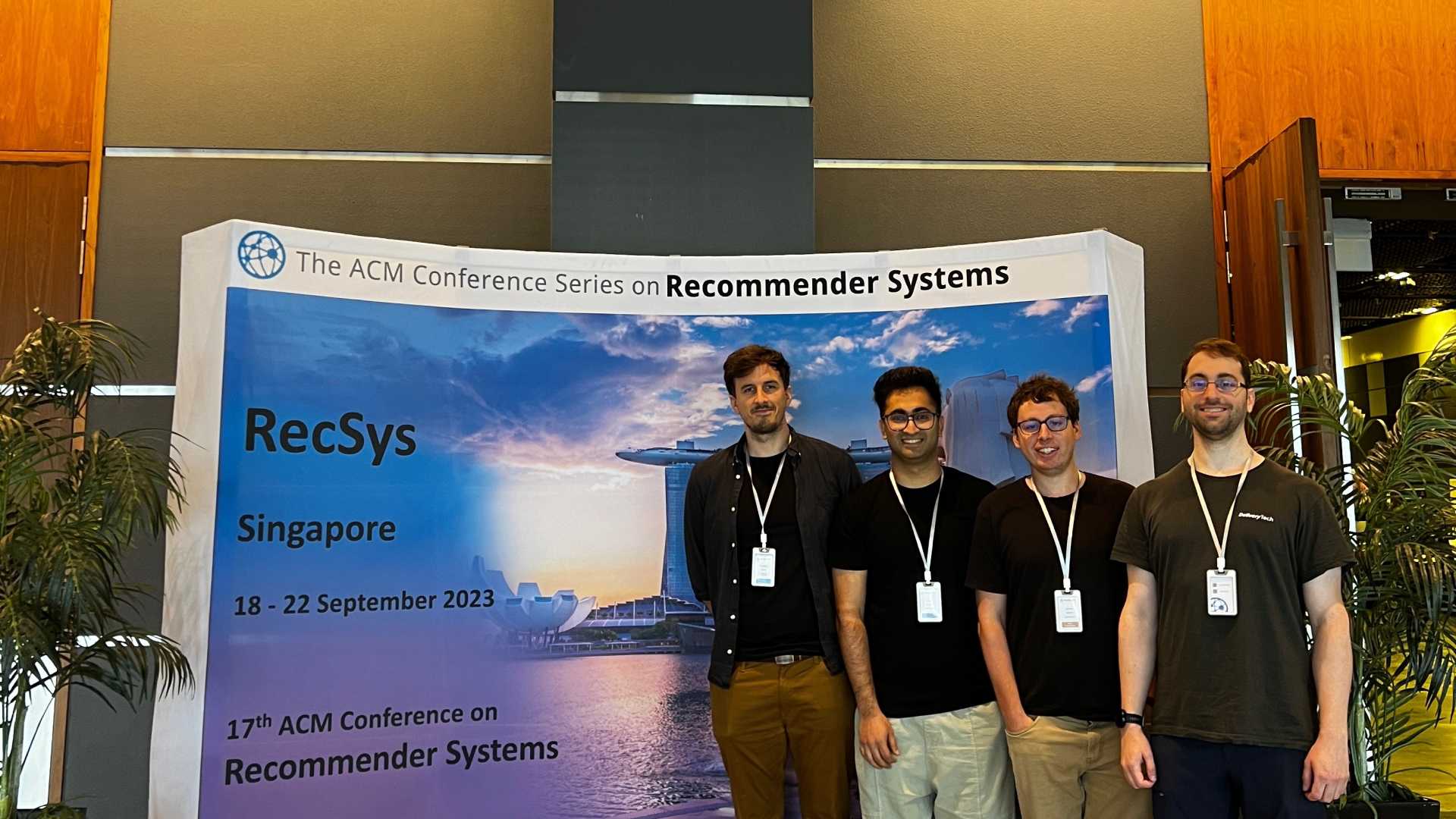

ACM RecSys is a top-tier conference on recommender systems. Two of our papers have been accepted at the 17th edition of this annual event, which takes place in Singapore. This post provides a brief overview of our work.

At Delivery Hero, we passionately support innovation and research. This dedication is reflected in our representation at this year’s ACM RecSys Conference, where we’re excited to present not just one, but two accepted papers. This achievement is a testament to our commitment to fostering a collaborative work environment and supporting our team’s contributions to the broader community. By encouraging our data scientists to share their work, we aim to enrich the field of machine learning and recommendations and promote wider understanding. As we present our work at the conference in Singapore, we look forward to more insightful discussions and invite you to delve into our research.

The ACM RecSys Conference

The ACM Conference on Recommender Systems (RecSys) is the leading global forum for new findings in the field of recommender systems. It brings together prominent international research groups and major e-commerce companies to discuss and share the latest research. RecSys 2023, the 17th edition, takes place in Singapore. The conference also co-hosts a number of workshops focusing on key challenges in the recommendation space.

Our Work

At Delivery Hero, we tackle a variety of challenging problems across multiple domains. Recommendations are one of the key focus areas for our data science teams, especially given the diverse components within our apps (yes, we have a number of products across markets and our models power a number of key features!). Our accepted works [1] [2] at this year’s RecSys are the outcome of focused work on some of these key challenges and of our commitment to sharing our findings with a larger audience.

Delivery Hero Recommendation Dataset: A Novel Dataset for Benchmarking Recommendation Algorithms

Recommendation engines, like every other learning setup, are data-hungry systems. But unlike domains such as NLP and Computer Vision, the recommendation space hasn’t seen enough open-source datasets that enable researchers and practitioners to explore and push the boundaries. As part of this effort, the authors explored a number of existing datasets and analyzed in detail what these datasets provide, what they lack and which use cases they can power. This helped them come up with a list of key characteristics a dataset should have in order to be useful for researchers in the recommendation space. For instance, any such dataset should be easily accessible, enable reproducibility of experiments, be representative of real-world settings and have a large enough volume.

The result was a dataset consisting of ~4 million actual orders from 3 cities across different regions serviced by our apps. The dataset is also supplemented with information related to thousands of vendors along with the millions of products they serve. Because food delivery is a hyper-local domain, it is imperative that the dataset also provides geographical information. The team came up with novel approaches to ensure no Personally Identifiable Information (PII) is exposed while maintaining the representativeness of the dataset.

The dataset aims to power a number of different tasks, not just in the recommendation space but also in NLP and unsupervised learning. For instance, the challenges associated with non-trivial cuisine and product names would motivate researchers to explore novel pre-processing techniques to reduce sparsity, improve product matching, etc.

All of this and more is detailed in the paper and the corresponding repository. Check it out here.

Offline Evaluation Using Interactions to Decide Cross-selling Recommendations Algorithm for Online Food Delivery

Online A/B tests are the gold standard when it comes to testing recommendation systems. Yet these tests are complex to set up, time-consuming and effort-intensive, not to mention the risk of impacting business metrics if they are not done correctly. Offline evaluation, on the other hand, is far simpler to implement but is limited by biases, noise and other issues in the data and setup. More often than not, offline and online evaluations do not agree on model performance.

The Cart Offline Evaluation Framework (COEF) presented in this paper is a step towards mitigating some of the deficiencies in offline evaluation. The COEF, coupled with custom evaluation metrics such as Cart Value Impact (cvi @ K), Upsell @ K, Downsell @ K and mWAP @ K, enables our data scientists to iterate faster by relying on robust offline evaluation results.

The paper goes into the details of two specific algorithms for building an offline evaluation dataset complete with key interactions like Add to Cart and Remove from Cart. When combined with the custom metrics, these algorithms yield offline results that directionally match the performance of our models in online tests. The paper also presents results from multiple experiments to support the efficacy of this work.
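To give a feel for what interaction-based offline evaluation can look like, here is a minimal sketch. It is not the COEF or either of the paper's two algorithms, and it does not reproduce the paper's metrics, which are defined there. It assumes a hypothetical event log of `(session_id, action, item_id)` tuples, replays Add to Cart and Remove from Cart events to rebuild each final cart, holds out the last item, and computes a generic hit rate @ K. The names `final_carts`, `hit_rate_at_k` and `popular_items` are illustrative only.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical event format (an assumption): (session_id, action, item_id)
Event = Tuple[str, str, str]


def final_carts(events: Sequence[Event]) -> Dict[str, List[str]]:
    """Replay add/remove events in order and return each session's final cart."""
    carts: Dict[str, List[str]] = {}
    for session_id, action, item_id in events:
        cart = carts.setdefault(session_id, [])
        if action == "add_to_cart":
            cart.append(item_id)
        elif action == "remove_from_cart" and item_id in cart:
            cart.remove(item_id)
    return carts


def hit_rate_at_k(
    carts: Dict[str, List[str]],
    recommend: Callable[[List[str]], List[str]],
    k: int,
) -> float:
    """Hold out the last item of each cart; count how often it is in the top k."""
    hits = 0
    total = 0
    for cart in carts.values():
        if len(cart) < 2:
            continue  # need at least one context item and one held-out item
        context, held_out = cart[:-1], cart[-1]
        hits += held_out in recommend(context)[:k]
        total += 1
    return hits / total if total else 0.0


# Toy data, made up for illustration.
events: List[Event] = [
    ("s1", "add_to_cart", "burger"),
    ("s1", "add_to_cart", "fries"),
    ("s1", "add_to_cart", "cola"),
    ("s1", "remove_from_cart", "fries"),
    ("s2", "add_to_cart", "pizza"),
    ("s2", "add_to_cart", "cola"),
    ("s3", "add_to_cart", "salad"),
]


def popular_items(context: List[str]) -> List[str]:
    """Stand-in recommender that ignores the cart and returns a fixed ranking."""
    return ["fries", "cola", "water"]


carts = final_carts(events)
print(hit_rate_at_k(carts, popular_items, k=1))
print(hit_rate_at_k(carts, popular_items, k=2))
```

Accounting for removals matters here: an item that was added and later removed should not count as a successful recommendation target, which is one reason a log of cart interactions is richer than a list of completed orders.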

All of this and more is detailed in the paper and the corresponding repository. Check it out here.

Acknowledgements

These works are the result of countless hours of hard work and discussions among the authors (Yernat Assylbekov, Raghav Bali, Luke Bovard, Christian Klaue and Manchit Madan), an amazing team of reviewers both within the team and across the organization, and an amazingly supportive leadership: Sebastian Rodriguez, Koray Kayir, and Ankur Kaul.

If you like what you’ve read and you’re someone who wants to work on open, interesting projects in a caring environment, we’re on the lookout for Data Architect – Principal Machine Learning Engineers and Data Science Managers. Check out our full list of open roles here – from Backend to Frontend and everything in between. We’d love to have you on board for an amazing journey ahead.