Learn how the BADA (Baemin Advanced Data Analytics) team at Woowa Brothers developed an LLM-based agentic product to enhance data literacy.

AI data analyst, QueryAnswerBird (QAB) is a product born from the Woowa Hackathon 2023 —an internal hackathon in which any Woowa Brothers employee can participate — held under the theme of generative AI.

At the hackathon, it was presented as a service that automatically extracts and visualizes data without the need for coding and won first place.

Even after the hackathon, there was high demand and interest from employees so much so that the Langineer Task Force was established for full-scale development in January 2024. For the last six months, the task force has developed QAB even further so that it can generate queries and interpret queries, validate query syntax, explore tables, and log table utilization guides.

Now let us take you through how the task force members worked tirelessly over the last six months to develop the groundbreaking AI service, QueryAnswerBird (QAB).

1. WHY did we get together again?

1.1 Problem identification

Before establishing the task force, we had to reidentify the problems that needed to be solved and clearly set the direction for product development. To do this, we conducted a company-wide survey on the status of internal data usage.

The survey results showed that about 95% of the respondents were using data for their work. However, more than half of the respondents said that even though they wanted to use SQL, they didn’t have enough time to learn it or had difficulty generating SQL queries reflecting business logic and various extraction conditions. Also, they were concerned about the reliability of data extraction.

From the survey results, we found that we could potentially enable employees to concentrate on their work and facilitate data-based communication by solving this issue.

1.2 Solutions

To address the challenges employees were facing, which were identified through the survey, we set the goal of the product as raising employees’ data literacy. Data literacy refers to the ability to extract and interpret meaningful information from data, verify its reliability, draw insight from data exploration and analysis, and make reasonable decisions. The product aims to solve the challenges faced by employees to significantly enhance business productivity and maximize efficiency. The four core pillars of the product that are crucial in achieving its goal are as follows:

- Systemization: Establish a consistent data structure by utilizing table metadata from the data catalog, along with business terminology and verified data marts provided by the Data Service Group.

- Efficiency: Develop technology that understands Woowa Brothers’ business and improves the efficiency of internal data search capabilities.

- Accessibility: Use Slack, a workplace messaging tool, instead of a web-based method, to allow anyone to ask questions easily and quickly.

- Automation: Aim to provide an automated data service that can be used anywhere, 24/7 without the help of dedicated data personnel.

With the long-term goal of enhancing data literacy and the four pillars established for the task force, we set out to develop QueryAnswerBird (QAB), a personalized data analyst that can innovate employees’ way of working.

2. WHAT did we make?

2.1 Foundation technology of QAB

QAB is based on LLM, RAG, Langchain, and LLMOps technologies.

An LLM (Large Language Model) is based on a deep learning algorithm, one of the most popular models being OpenAI’s GPT-4o. This model can answer general questions but cannot answer correctly to questions that are specific to a particular company because it has not learned data specific to that particular company.

RAG (Retrieval-Augmented Generation) addresses this issue by saving and searching internal data to improve the answer quality of LLM. The model will search for the necessary information to answer questions instead of learning the data itself. Langchain is an open-source framework that supports the development of the LLM and applications to efficiently enable this technology.

Lastly, LLMOps (Large Language Model Operations) refers to the operation methods and tools for the deployment, management, and monitoring of LLMs. It optimizes various elements such as model training, tuning, version management, performance monitoring, response speed management, data security, and cost. This can be applied to LLM-related engineering areas without the foundation model.

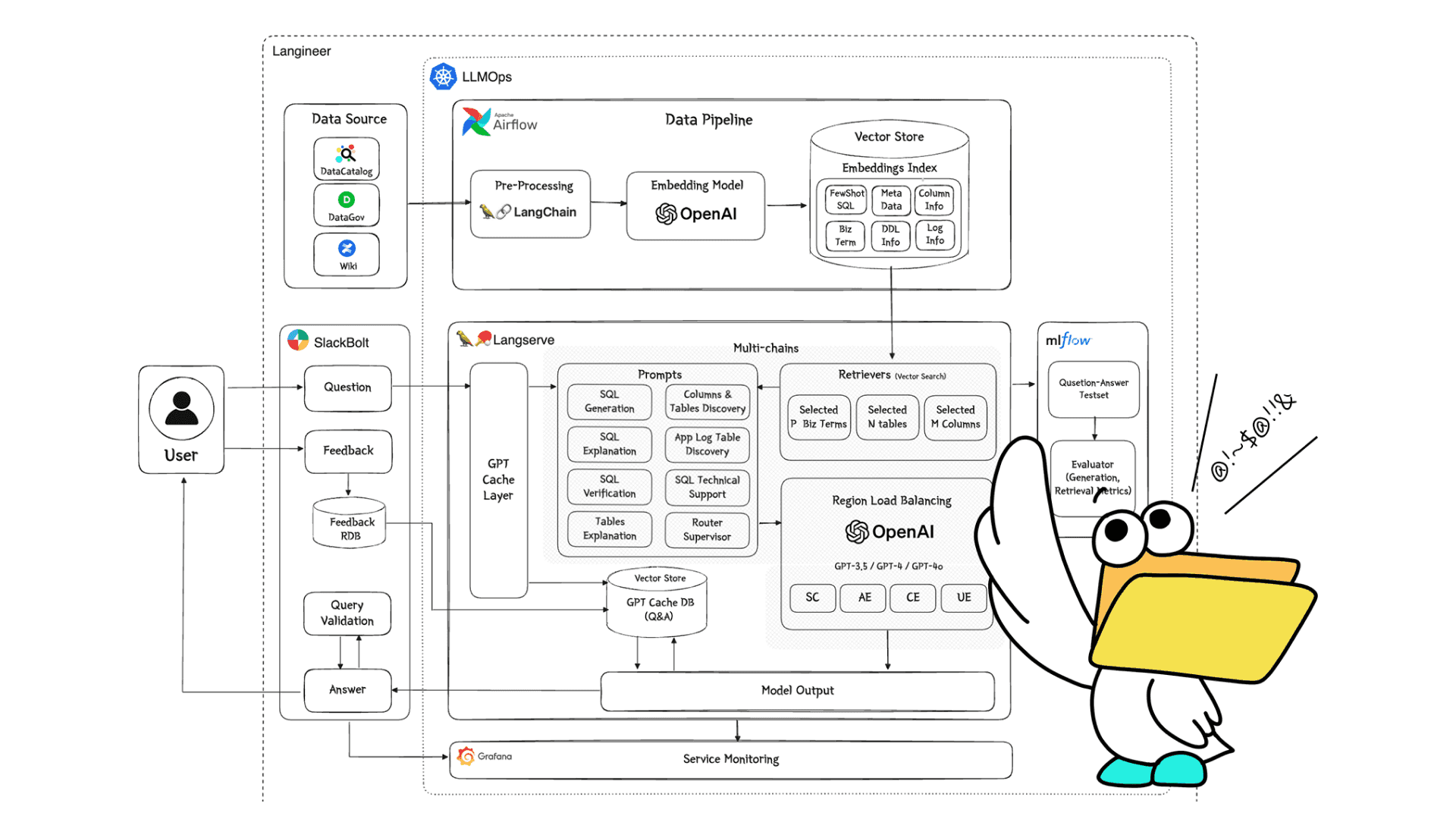

2.2 Development architecture of QAB

The initial version of the development from Woowa Hackathon 2023 was simple, using prompts and Microsoft Azure OpenAI’s GPT-3.5 API. However, we designed a new architecture because the initial version had limitations in achieving systemization, efficiency, accessibility, and automation.

(1) Establishment of an unstructured data pipeline based on vector store

- We established a pipeline that can automatically collect unstructured data such as business terminology, table metadata, and data extraction code to understand the vast domain knowledge of the company.

- We vectorized unstructured data through embedding and stored it in VectorDBs to enable vector similarity searches.

- We applied an embedding index by data area for efficient data update.

(2) Development of a RAG-based multi-chain structure to provide various literacy features

- When a user asks a question, the Router Supervisor chain identifies the purpose of the question and categorizes it into the appropriate question type in real time.

- The questions are then mapped to multi-chains (e.g. query generation, query interpretation, query syntax validation, table interpretation, log table utilization guide, and column and table utilization guide) that can provide the best possible answer to the user’s question.

- When executing multi-chains, utilize search algorithms for each chain to enable the retriever to selectively extract the necessary data.

(3) Establishment of LLMOps for the development, deployment, and operation of the LLM service

- We established an experiment environment for AB testing and deployed the best-performing chain with the help of leaderboards.

- We built features and environments to enhance the service operation quality such as API load balancing, GPT caching, feedback loops, and operation monitoring dashboards to ensure response stability, speed, and error handling.

- Service is automatically deployed through CI/CD.

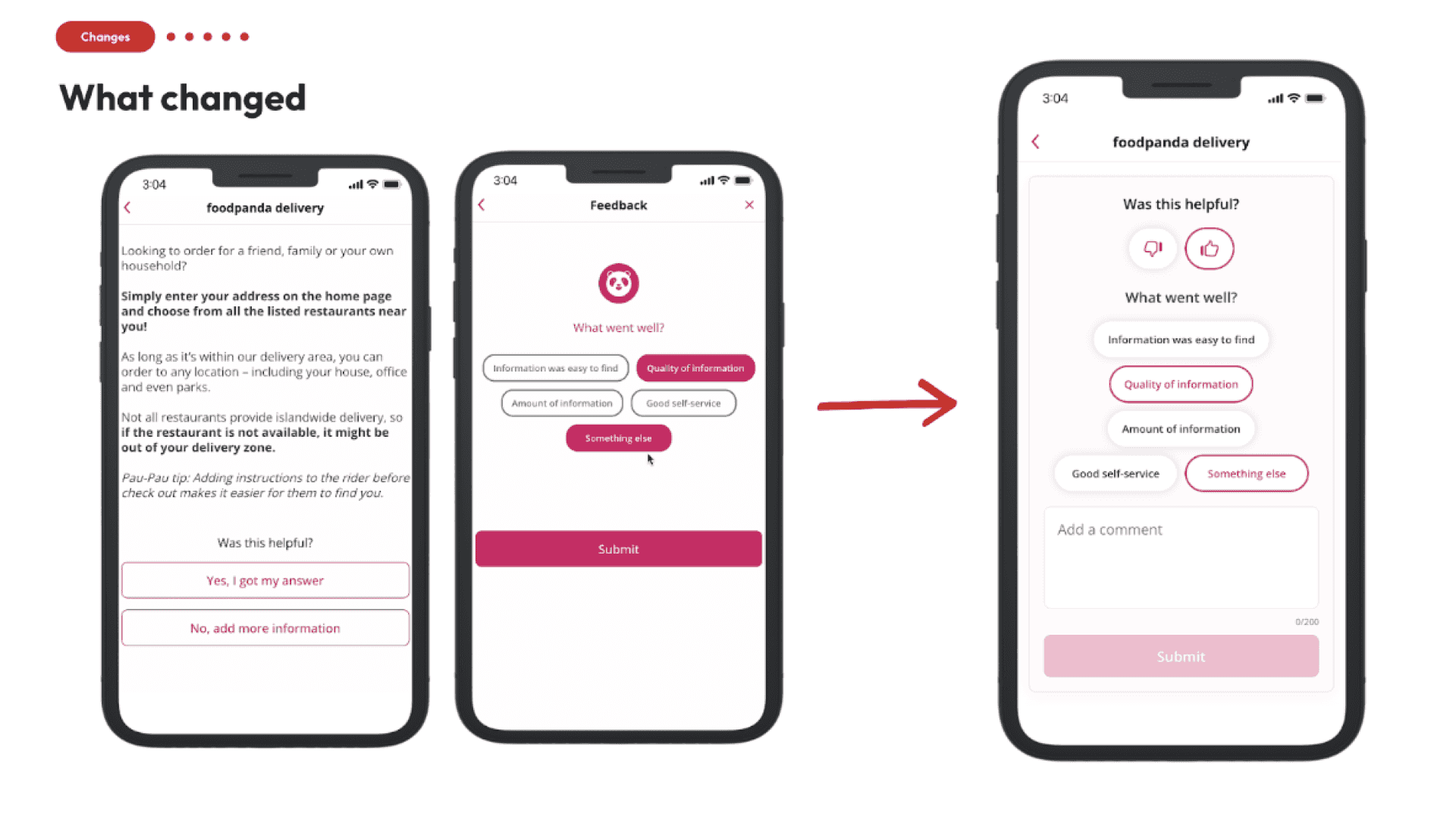

(4) Implementation of various response features based on Slack

- Employees can easily ask questions and receive answers anytime on the Slack application.

- Answers can be evaluated (satisfied/unsatisfied), which will be reflected in the GPT cache, expanding standardized data knowledge to other users.

- For query generation, the answer also provides an additional description of whether the generated query executes correctly or contains errors by validating the query.

With the interconnection of the technologies mentioned above, QAB can provide high-quality responses consistently.

2.3 Character design of QAB

Other than the technical aspects, we believed that it was important to include an element that helped users feel connected to QAB, rather than repulsed in the process of using it to find the right answer because it felt too much like talking to a robot. Also, we wanted to create a character so that when the responses are inaccurate, it doesn’t become a negative experience but rather makes users feel like they are working together with a friend to solve a problem.

Woowa Brothers’ AI data analyst, QueryAnswerBird (QAB) was born with the help of the Design Group, who are serious about character design. Ask anything and QAB will bring you the answer from the vast sea of Woowa’s data. The character has a cute nerdy image, combining a computer folder with a pelican. Although it looks cute and charming on the outside, it is composed of complex systems on the inside.

3. HOW did we work?

We worked in short sprints since task force teams have to achieve goals with limited resources in a specific period. We divided the development roadmap into three steps, running two-week sprints for each step.

It was difficult to clearly understand each other’s strengths and interests since no one in the team had experience in developing LLM-based products. To address this issue, we separated the tasks into components and rotated the tasks for each sprint. Team members were free to choose which tasks to work on.

This approach may be slow due to overlapping tasks in the initial stages, but the work speed will pick up as the sprints are carried out repeatedly. This is because as the team goes through task cycles, each member will find their area of interest and the tasks that play to each member’s strength will be assigned to them as their main tasks.

This helped the Task Force members to stay motivated and keep delivering great performance even with the highly energy-consuming nature of task force work. Also, by going through rotations, members were able to obtain a wide range of skill sets and have a better understanding of each other’s work, naturally leading to stronger teamwork.

Now let’s go into the details of QAB’s development.

4. HOW did we build Text-to-SQL?

For the first two months, we focused on the keyword Text-to-SQL (Structured Query Language). Text-to-SQL is a technology that converts natural language into SQL queries and is one of the key features of QAB. SQL is critical for data-based decision-making in business and a must-have skill if a user desires to handle data freely.

In general, LLM models like GPT-4 can generate high-quality SQL queries. So, how can we build a Text-to-SQL feature that reflects various contexts and knowledge of the company by just using GPT?

This is a tricky question. Although we can generate queries using only GPT-4, the queries lack quality to be used for work because they lack knowledge of the domains and data policies of the company, and include unnecessary data due to the default retriever’s lacking performance. Another problem is the inconsistent quality of the generated queries due to the inherent problem of LLM, hallucination. Even though the queries may seem fine, they are not usable. This indicates that while the quality of responses may improve to a certain extent as the performance of the foundation model progresses, there will ultimately be limitations.

How can we solve this problem? We added a feature to QAB that generates LLM responses based on domain knowledge using DocumentLoader, Vectorstore, and RAG QA provided by LangChain. Then we developed a new structure focusing on the following four elements: data augmentation, development of a search algorithm, prompt engineering, and establishment of a system for testing and evaluation.

4.1 QAB’s Text-to-SQL feature

Let’s have a look at QAB’s Text-to-SQL chain area.

(1) Data augmentation

You might be familiar with the phrase “garbage in, garbage out”, which is generally used for LLM as well. To enhance the performance of GPT-based Text-to-SQL, the most important thing is to collect the right documentation. Data Ambiguity Strikes Back: How Documentation Improves GPT’s Text-to-SQL, a paper presented at the NeurIPS 2023, covers the importance and methods of improving data ambiguity.

Based on this, we came up with ways to enrich table metadata and performed more advanced metadata generation tasks than before. While the existing table metadata was well structured, we added detailed information that had not been recorded previously, such as the purpose and characteristics of the table, detailed descriptions of columns, key values, and keywords. We also listed commonly used services and related questions. Based on the table metadata, we were able to generate richer table DDL data than before. For the collection of table DDL data, we were able to easily add information and automatically collect the newest data through API, thanks to the well-established internal data catalog system.

Users’ questions are full of various business terms that only employees can understand. It is important to standardize and manage such business terms to prevent any miscommunication because they may be used differently for each service and organization. Woowa Brothers has an organization managing data governance, which also manages data and business terminology. A business terminology glossary dedicated to Text-to-SQL was created using the existing standard business terminology glossary.

Lastly, we built a few-shot SQL example data. Few-shot method refers to an approach where several example responses are inputted into the prompt. This helps the model to learn various information needed for generating a more accurate and relevant response to the user’s questions. This plays a crucial part in feeding domain knowledge to the queries, so the higher the quality of the example queries, the better the responses will be. Therefore, we collected example data such as high-quality queries generated in advance by data analysts and additional queries about key business questions. Also, we built a question-query dataset by writing questions corresponding to each query. Few-show SQL example data must be managed by data analysts specializing in each domain because of the ever-changing data extraction criteria and rapidly evolving business landscape. We plan to establish a cooperation system with data analysts of each domain to communicate seamlessly and manage data.

The mentioned data is collected daily through an unstructured pipeline. Therefore, it is possible to automatically collect the latest data policies, which are changing constantly, quickly verify and reflect them in our services. Moreover, as data volume continues to increase, we built an environment that updates only the changes by applying indexing to VectorDB to improve the speed of data updates.

(2) Development of a search algorithm

When using prompts, it is important to input data using the right search algorithms for each user question and stage. When a user question is ambiguous, short or unclear, refining the question can solve the problem.

Understanding business terms is essential when narrowing down a question, so extracting appropriate terms relevant to the purpose of the question is crucial. Extraction of similar yet irrelevant terms can lead to the LLM generating incorrect questions, so a sophisticated search algorithm that can fully understand the intent is required at this stage.

When extracting the information necessary for generating queries based on the specified question, various types of information such as table and column metadata, table DDL, and few-shot SQL are used. Extracting the most relevant information from the vast amount of information to answer the user’s question is crucial at this stage. In other words, it requires understanding the context of the user’s question and combining various search algorithms such as extracting the most relevant information or filtering for specific keywords.

Likewise, for few-shot SQL examples, it is important to select the most similar examples to the question and add similar examples if there are none. By inputting the combined input values of each stage into GPT, we can generate high-quality query responses with reduced hallucination risks.

(3) Prompt engineering

Prompts can be divided into question refinement prompts and query generation prompts but both use a common element.

First, both types of prompts are given the persona of a data analyst. This requires thorough discussions about the desired role and result since the quality of the response can change depending on the given persona.

Next, let’s go over the structure design of the prompts. There are various ways to design the structure of prompts. According to the paper ReAct: Synergizing Reasoning and Acting in Language Models, published at the ICLR 2023, the LLM-based ReAct method outperformed imitative learning and reinforcement learning in terms of response quality in various benchmarks. The ReAct method is divided into sequential reasoning (chain-of-thought, COT) for problem-solving and tools or actions for performing specific tasks. When these two elements are used together, it creates a synergy effect and generates a more accurate response than using only one type of method.

We applied the ReAct method’s idea to develop QAB’s query generation prompt. This prompt goes through a step-by-step reasoning process (COT) to generate the appropriate query for the user’s question. Also, it dynamically searches and selects the appropriate data for the question. The response becomes increasingly sophisticated as the prompt goes through the combined process of reasoning and searching, enabling a more accurate response than using just a simple reasoning method.

Other various prompt engineering methods are also applied and we are gradually enhancing the Text-to-SQL feature’s performance through continuous testing.

(4) Establishment of a system for testing and evaluation

There are various leaderboards that people around the world participate in to evaluate and compete in Text-to-SQL performance. The best-known leaderboards are YALE Spider and Alibaba BIRD. There’s also RAGAS Score which pursues metrics-based development for the continuous improvement of LLM and RAG application.

The shared aspect of the evaluation methods and metrics mentioned above is that they evaluate the Text-to-SQL performance based on evaluation data and metrics, allowing them to assess the current status and make improvements.

However, there are limitations when it comes to solving various business problems within the company using publicly available metrics and leaderboards. In particular, it is challenging to address domain-specific issues that are not general issues, and it is difficult to update key metrics that are considered important based on the business situation. To solve this problem, we developed our own evaluation metrics and data that serve as the foundation for measuring the internal Text-to-SQL performance by benchmarking existing leaderboards and metrics.

We conducted step-by-step tests to enhance the Text-to-SQL feature’s performance by using the evaluation metrics and data we developed. Testing phases range from evaluating the understanding of query syntax to evaluating the accuracy of query execution results that incorporate complex domain knowledge. Currently, we are testing to evaluate how well the Text-to-SQL feature understands complex domains and how accurate the query execution results are, while also incorporating users’ questions to make improvements.

We built an internal leaderboard and conducted more than 500 A/B tests to bring our individual ideas to life. In particular, the ranking of individual results added a gamification element, which made it more enjoyable to participate. The highest-performing results were approved during weekly sync-ups and then deployed to production, gradually enhancing service performance.

Moreover, it is possible to quickly check prompt modifications or changes in the chain’s performance by using Langserve Playground.

4.2 Query generation and interpretation feature of QAB

We were able to develop a query generation and interpretation feature in just two months through QAB’s basic architecture and Text-to-SQL feature. This feature provides users with responses within 30 seconds to 1 minute, offering high-quality queries that can be referenced for work.

Employees who newly joined the company or are in charge of different service domains say that QAB’s query generation and interpretation feature helped them greatly in better understanding their work. However, there was some feedback saying that it has room to improve in terms of the accuracy of the business logic and understanding of the question. To deliver more accurate information, we are currently carrying out tasks to improve QAB’s performance through various methods and tests, taking into account different feedback and question histories.

5. Concluding Part 1 on QueryAnswerBird

As we gradually increased the number of test participants and target organizations, we realized that a significant proportion of the questions was about data discovery, in addition to query generation.

Data discovery is about exploring and understanding table columns, structures, and types to derive meaningful insights and to reflect them into business intelligence (BI) reports. To make this possible, it is necessary to not only generate queries but also answer various data-related questions. This is why we decided to expand QAB’s features beyond Text-to-SQL to cover data discovery.

While part 1 of the blog post focused on introducing QAB and the progress of building its Text-to-SQL feature, part 2 will cover the data discovery features of the product and future plans in detail.

Please note: This article is an English translation of BADA(Baemin Advanced Data Analytics) team’s blog post titled “Introducing the AI Data Analyst “QueryAnswerBird” – Part 1. Utilization of RAG and Text-to-SQL” published in July 2024.

If you like what you’ve read and you’re someone who wants to work on open, interesting projects in a caring environment, check out our full list of open roles here – from Backend to Frontend and everything in between. We’d love to have you on board for an amazing journey ahead.