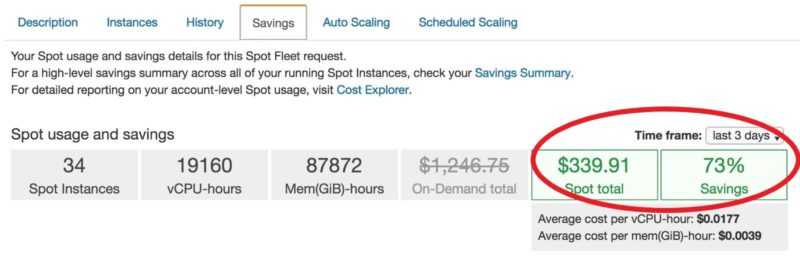

My name is Gabriel Ferreira and I work as a Senior Principal Systems Engineer at Delivery Hero, leading the Global Logistics Infrastructure Team. In the midst of a cost-cutting project, we asked ourselves: why not run our Kubernetes nodes on AWS Spot Instances? Since Spot Instances are roughly 70% cheaper than On-Demand Instances, this would definitely save us some money.

Watch Vojtech Vondra, our Senior Director for Logistics, who presented this approach at the AWS Summit in Berlin this year.

So, when we kicked off the project, the first question we asked ourselves was: "How do we accomplish this?" Now, more than a year later, our production environments run stably and cost-efficiently on Spot Instances, with around 200 nodes across 3 AWS regions.

Without going into too much technical detail, I will share the idea behind our setup, how we implemented it and why we did it this way. Feel free to reach out to me if you are interested in a more in-depth explanation.

Before going further, let me list some key points for running a stable environment on Spot Instances.

- Spread the risk: use multiple ASGs, instance types and AZs (*EC2 Spot Fleet*).

- Work with over-provisioned clusters that always have free room for pods.

  - Keep in mind that Spot Instances can and will be terminated, and you do not want the affected pods to wait for new machines to start.

- Be ready for the worst case.

  - Example: a fallback ASG with On-Demand Instances. You can set its minimum size to 0; the cluster-autoscaler will scale it up when necessary.

- Have reliable applications.

  - Your apps must start and stop properly, simple as that.
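One way to express the On-Demand fallback from the list above, assuming the cluster-autoscaler runs with its priority expander (`--expander=priority`), is a ConfigMap that ranks the Spot ASGs above the On-Demand one. The sketch below builds that ConfigMap as a Python dict. The name patterns `.*spot.*` and `.*on-demand.*` are hypothetical and must match your own ASG names; check the cluster-autoscaler documentation for your version before relying on it.

```python
import json
from typing import Dict


def priority_expander_configmap(
    spot_pattern: str = ".*spot.*",
    on_demand_pattern: str = ".*on-demand.*",
    namespace: str = "kube-system",
) -> Dict:
    """Build a ConfigMap for the cluster-autoscaler priority expander.

    Higher numbers mean higher priority: the autoscaler prefers node groups
    matching spot_pattern and falls back to those matching on_demand_pattern
    when no Spot group can be scaled up. Both regexes are hypothetical and
    must match your own ASG names.
    """
    priorities = "\n".join(
        [
            "10:",
            f"  - {on_demand_pattern}",
            "50:",
            f"  - {spot_pattern}",
        ]
    )
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {
            # Name the priority expander reads; it must live in the same
            # namespace as the cluster-autoscaler deployment.
            "name": "cluster-autoscaler-priority-expander",
            "namespace": namespace,
        },
        "data": {"priorities": priorities + "\n"},
    }


if __name__ == "__main__":
    # JSON is valid YAML, so the output can be applied with kubectl.
    print(json.dumps(priority_expander_configmap(), indent=2))
```

With the On-Demand ASG at a minimum size of 0, it costs nothing until the Spot groups cannot satisfy pending pods.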

Our First Try

Our first setup was basically a default kops setup with multiple Spot ASGs, like this (ignore the min/max values, since this is only an example):
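As a rough sketch of what multiple Spot ASGs look like in kops terms, the following Python snippet generates one kops `InstanceGroup` manifest per instance type. Setting `spec.maxPrice` is what makes kops request Spot Instances for a group. The cluster name, subnets, instance types, sizes and price are hypothetical placeholders.

```python
import json
from typing import Dict, List


def spot_instance_groups(
    cluster: str,
    instance_types: List[str],
    subnets: List[str],
    max_price: str,
    min_size: int = 1,
    max_size: int = 10,
) -> List[Dict]:
    """Return one kops InstanceGroup manifest (as a dict) per instance type.

    Each group becomes its own ASG spanning all given subnets/AZs, so a
    capacity shortage for one instance type only affects that ASG.
    """
    groups = []
    for machine_type in instance_types:
        groups.append(
            {
                "apiVersion": "kops.k8s.io/v1alpha2",
                "kind": "InstanceGroup",
                "metadata": {
                    # Hypothetical naming scheme, e.g. "nodes-spot-c5-2xlarge".
                    "name": "nodes-spot-" + machine_type.replace(".", "-"),
                    "labels": {"kops.k8s.io/cluster": cluster},
                },
                "spec": {
                    "role": "Node",
                    "machineType": machine_type,
                    # Setting maxPrice makes kops request Spot Instances.
                    "maxPrice": max_price,
                    "minSize": min_size,
                    "maxSize": max_size,
                    "subnets": list(subnets),
                },
            }
        )
    return groups


if __name__ == "__main__":
    for ig in spot_instance_groups(
        cluster="example.k8s.local",
        instance_types=["c5.2xlarge", "m5.2xlarge", "r4.2xlarge"],
        subnets=["eu-west-1a", "eu-west-1b", "eu-west-1c"],
        max_price="0.40",
    ):
        print(json.dumps(ig, indent=2))
```

Each dict can be dumped to YAML and fed to `kops create -f`, one file per group.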

Apart from the kops setup, the only extra app that I would call mandatory is kube-spot-termination-notice-handler. It is a DaemonSet that runs on each Spot node, polls the EC2 Spot Instance termination notice URL and drains the node when it receives an HTTP 200 (which means that this Spot node is about to be terminated). Under the hood, it basically runs `kubectl drain ${NODE_NAME} --force --ignore-daemonsets`.
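The core loop of such a handler can be sketched in Python as below. This is not the project's actual code: it assumes the legacy instance metadata endpoint `http://169.254.169.254/latest/meta-data/spot/termination-time` (which answers 404 until an interruption is scheduled and 200 afterwards), IMDSv1 access without a session token, and a `NODE_NAME` environment variable injected into the pod, for example via the Downward API.

```python
import os
import subprocess
import time
import urllib.error
import urllib.request
from typing import List

# Assumption: legacy EC2 metadata endpoint; it returns 404 until AWS schedules
# a Spot interruption and 200 (with the termination time) afterwards.
TERMINATION_URL = "http://169.254.169.254/latest/meta-data/spot/termination-time"


def termination_scheduled(url: str = TERMINATION_URL, timeout: float = 2.0) -> bool:
    """Return True only when the metadata endpoint answers HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status == 200
    except urllib.error.HTTPError:
        return False  # e.g. 404: no termination scheduled yet
    except OSError:
        return False  # unreachable or timed out; try again on the next poll


def drain_command(node_name: str) -> List[str]:
    # Same command the handler runs in the background.
    return ["kubectl", "drain", node_name, "--force", "--ignore-daemonsets"]


def watch(node_name, check=termination_scheduled, run=subprocess.run,
          interval: float = 5.0, max_polls=None) -> bool:
    """Poll until a termination notice arrives, then drain the node once.

    Returns True if the node was drained, False if max_polls ran out.
    """
    polls = 0
    while max_polls is None or polls < max_polls:
        polls += 1
        if check():
            run(drain_command(node_name), check=True)
            return True
        time.sleep(interval)
    return False


if __name__ == "__main__":
    watch(os.environ["NODE_NAME"])
```

Injecting `check` and `run` keeps the loop testable without a real metadata service or cluster.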

This setup was "running fine" for around 4 months. Then we started to see that in some regions, like ap-southeast-1 (Singapore), the termination rate for some instance types (in this case r4) was terrible compared to other regions. Even though most of the time we had no Spot terminations, sometimes 3 or 4 nodes died at the same time, which caused some noise during peak time.

Our Current Setup

After a quick search we found EC2 Spot Fleet. This was a game changer in terms of stability.

Even though Spot Fleet is still not supported by kops, with a simple copy/paste of the EC2 user data (or some automation) you can create Spot Fleets and let them manage all Spot nodes for you. Spot Fleet works out the best instance type and the best place to launch it. In the end, you define a pool of resources (instance types and AZs) and Spot Fleet takes care of everything else.
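To make "a pool of resources" concrete, here is a minimal sketch that builds a `SpotFleetRequestConfig` with one launch specification per instance type and subnet (AZ). All IDs, ARNs and instance types are hypothetical placeholders, and other settings kops nodes need (such as tags) are omitted. The `diversified` allocation strategy is one possible choice; newer strategies such as `capacityOptimized` may suit you better.

```python
import base64
import itertools
from typing import Dict, List


def build_spot_fleet_config(
    instance_types: List[str],
    subnet_ids: List[str],
    image_id: str,
    user_data: str,
    fleet_role_arn: str,
    instance_profile_arn: str,
    security_group_ids: List[str],
    target_capacity: int,
) -> Dict:
    """Build a SpotFleetRequestConfig covering every instance type x subnet.

    "diversified" spreads instances across all launch specifications
    instead of putting them all into the single cheapest pool.
    """
    # Spot Fleet launch specifications expect base64-encoded user data,
    # e.g. the node user data copied from the kops setup.
    encoded = base64.b64encode(user_data.encode("utf-8")).decode("ascii")
    specs = [
        {
            "ImageId": image_id,
            "InstanceType": instance_type,
            "SubnetId": subnet_id,
            "UserData": encoded,
            "IamInstanceProfile": {"Arn": instance_profile_arn},
            "SecurityGroups": [{"GroupId": sg} for sg in security_group_ids],
        }
        for instance_type, subnet_id in itertools.product(instance_types, subnet_ids)
    ]
    return {
        "IamFleetRole": fleet_role_arn,
        "AllocationStrategy": "diversified",
        "TargetCapacity": target_capacity,
        # "maintain" makes the fleet replace interrupted instances.
        "Type": "maintain",
        "LaunchSpecifications": specs,
    }
```

With boto3, the result could be submitted via `boto3.client("ec2").request_spot_fleet(SpotFleetRequestConfig=config)`.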

After that, our termination rate decreased drastically: we now have Spot nodes that have been running for more than 90 days in some regions/availability zones.

Coming back to the kube-spot-termination-notice-handler script: with just a small change, a function that sends CPU usage (see our fork at https://github.com/gabrieladt/kube-spot-termination-notice-handler), we pull the requested CPU and memory from the Spot nodes and push them into CloudWatch. Spot Fleet can then use these custom metrics to scale the nodes up and down.
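A minimal sketch of the metric side, assuming the pod requests on a node and the node's allocatable resources are already available as Kubernetes quantity strings. The quantity parser only covers common suffixes, and the metric names and the `ClusterName` dimension are hypothetical, not taken from the fork.

```python
import re
from typing import Dict, List

# Multipliers for a subset of Kubernetes resource quantity suffixes.
_SUFFIXES = {
    "m": 1e-3,
    "k": 1e3, "M": 1e6, "G": 1e9, "T": 1e12,
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
}
_QUANTITY = re.compile(r"^([0-9]+(?:\.[0-9]+)?)([A-Za-z]*)$")


def parse_quantity(value: str) -> float:
    """Parse quantities such as "250m" (CPU) or "512Mi" (memory) into base units."""
    match = _QUANTITY.match(value.strip())
    if not match:
        raise ValueError(f"unsupported quantity: {value!r}")
    number, suffix = match.groups()
    if suffix and suffix not in _SUFFIXES:
        raise ValueError(f"unsupported suffix: {suffix!r}")
    return float(number) * _SUFFIXES.get(suffix, 1)


def requested_percent(requests: List[str], allocatable: str) -> float:
    """Share of a node's allocatable resource requested by its pods, in %."""
    total = sum(parse_quantity(r) for r in requests)
    return 100.0 * total / parse_quantity(allocatable)


def metric_data(cluster: str, cpu_pct: float, mem_pct: float) -> List[Dict]:
    """MetricData entries in the shape CloudWatch PutMetricData expects."""
    dims = [{"Name": "ClusterName", "Value": cluster}]  # hypothetical dimension
    return [
        {"MetricName": "RequestedCPUPercent", "Dimensions": dims,
         "Value": cpu_pct, "Unit": "Percent"},
        {"MetricName": "RequestedMemoryPercent", "Dimensions": dims,
         "Value": mem_pct, "Unit": "Percent"},
    ]
```

Each node could publish these with `boto3.client("cloudwatch").put_metric_data(Namespace=..., MetricData=...)`. Because every node uses the same dimension set, the CloudWatch `Average` statistic then averages across the whole Spot fleet.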


By employing this method, you can stay "over-provisioned", which is important for a Spot Instance setup and even for regular setups. We now work with at least 20% free CPU resources, since our apps are mainly CPU-bound. This means the cluster can lose up to about 20% of its capacity, for example to Spot terminations, and still have room inside the cluster for the evicted pods, all while saving money.
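The headroom arithmetic is easy to check: keeping 20% of CPU free means pod requests may use at most 80% of capacity. The sketch below computes the node count for that and, as one hypothetical way to wire it up, builds the arguments for an Application Auto Scaling target-tracking policy on the Spot Fleet that keeps the custom requested-CPU metric at 80%. The policy name, metric name and dimension are placeholders.

```python
import math
from typing import Dict


def nodes_for_headroom(requested_cores: float, cores_per_node: float,
                       free_fraction: float = 0.2) -> int:
    """Smallest node count that keeps at least free_fraction of CPU unrequested."""
    usable_per_node = cores_per_node * (1.0 - free_fraction)
    return math.ceil(requested_cores / usable_per_node)


def target_tracking_policy(spot_fleet_request_id: str, namespace: str,
                           cluster: str, free_fraction: float = 0.2) -> Dict:
    """Arguments for Application Auto Scaling PutScalingPolicy on a Spot Fleet.

    Tracking requested CPU at (1 - free_fraction) * 100 percent grows the
    fleet when requests rise above the target and shrinks it below.
    """
    return {
        "PolicyName": "spot-fleet-requested-cpu",  # hypothetical name
        "ServiceNamespace": "ec2",
        "ResourceId": f"spot-fleet-request/{spot_fleet_request_id}",
        "ScalableDimension": "ec2:spot-fleet-request:TargetCapacity",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": round((1.0 - free_fraction) * 100, 2),
            "CustomizedMetricSpecification": {
                "MetricName": "RequestedCPUPercent",  # hypothetical custom metric
                "Namespace": namespace,
                "Dimensions": [{"Name": "ClusterName", "Value": cluster}],
                "Statistic": "Average",
                "Unit": "Percent",
            },
        },
    }
```

For example, 150 requested cores on 16-vCPU nodes gives `nodes_for_headroom(150, 16) == 12`: 192 cores in total, of which 42 (about 22%) stay free. The policy dict would be passed to `boto3.client("application-autoscaling").put_scaling_policy(**policy)` after registering the fleet as a scalable target with `register_scalable_target`.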

Questions? Feel free to reach out to me on LinkedIn. If you have ideas on how to improve this and you want to join a great team, have a look at our current job openings.