Dive into the life of one of our backend teams at Delivery Hero. From tackling technical challenges to refining processes, see how we’ve grown and achieved high efficiency over two exciting quarters.

So, first things first, the team that we work for is called “Product Discovery Core”. I’m Dmitrii, a Senior Engineer in the squad, and I’m writing this with Arjit, who’s the Engineering Manager of the squad. And as the name implies, the variety of things we are responsible for is quite extensive. We handle a ton of responsibilities as a team, providing essential product data and enabling other teams. Our services are crucial to user flows, so we’re always in touch with stakeholders and owners for our various upstream and downstream dependencies. All these factors shape how we operate and the practices we use.

Engineering Excellence

Handling critical flows means we face some serious engineering challenges. At Delivery Hero, every team adheres to our internal reliability manifesto and guidelines. For us, this means every tiny change we make and every architectural decision we make must be not only functional but also highly performant and aligned with strict SLA and SLO commitments. And to ensure that we’re not afraid of deploying on Fridays, you know?

To give you a taste of it, let’s look into a recent project involving one of our business-critical systems.

Example: Grocery Products Data System

One of our key systems processes all grocery data on our platform. This service acts as a GraphQL API-aggregator gateway, hiding the integrational complexity and serving as the single source of truth for our client engineers. Having a service-aggregator layer means dealing with many moving parts. Each external dependency is a potential point of failure, adding latency and affecting overall system stability.

All these aspects directly affect the system’s SLO and SLA. Having a high-quality, performant solution becomes inevitable for our system. To achieve that, every engineer in the team needs to know the best practices, such as Circuit Breaker patterns, multiple caching layers, graceful degradation and retries mechanics and how to use and configure them correctly.

Monitoring and Observability

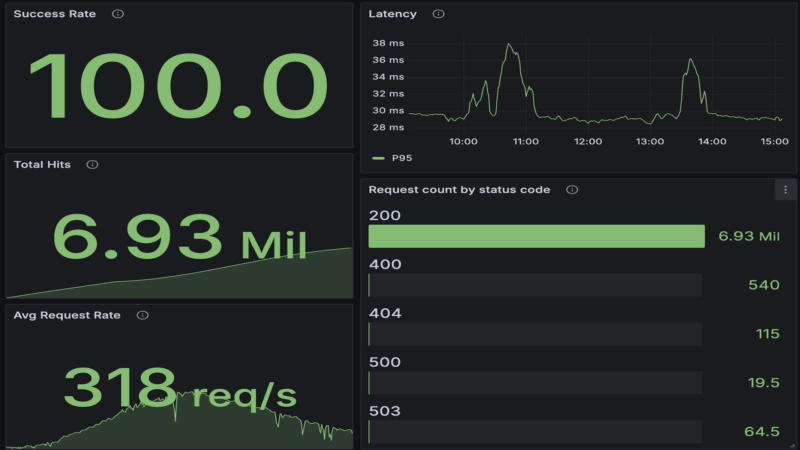

To ensure the system’s stability and performance you need to, of course, find a way to monitor and measure it first. For our team and for the mentioned API-aggregator gateway, building a reliable and trustworthy monitoring system was a challenge.

The graphql api-aggregator gateway had previously an observability system, which heavily used the service’s logs to build all the metrics and SRE golden signals. As practice showed, having the monitoring system based only on the logs did not satisfy all the observability and reliability requirements we have in DH. One of the reasons for this was that service logs are limited by the application layer and don’t provide enough visibility into requests as they flow across service boundaries. For the gateway aggregator, which doesn’t contain a lot of logic and communicates with upstream dependencies most of the time, such visibility is essential.

During production-level incidents, the lack of reliable and precise metrics and alerts led to higher mean time to recover (MTTR) and mean time to detect (MTTD) than our standards allowed. To fix this, we rebuilt broken parts of the monitoring system from scratch. We introduced new custom metrics, set up new alerts, and revamped our dashboards.

One of the challenging things for us was also the fact that the aggregator service was a GraphQL-based service. The GraphQL specification standards, the functioning of GraphQL APIs and the propagation of clients’ response errors became another engineering challenge we had to address in our new observability system. But that did not faze us at all – we rose to the challenge and did a phenomenal job. 😎

In the end, the new custom metric system was successfully delivered, which made the lives of the on-call engineers a whole lot easier, while improving the relevant metrics.

Refining Processes

Apart from technical skills, every engineer in our team needs to understand the business domain and how our system fits into Delivery Hero’s products. Keeping everyone on the same page is crucial. One of our favorite ceremonies is the bi-weekly internal operational review meeting. During these meetings, we review incidents from the past two weeks: discussing their causes, impacts, and most importantly, our learnings.

The best part? We don’t just review incidents caused by our services; we also look at incidents from dependent systems. This ensures every team member understands how the system functions at all levels and how everything is connected. We then update our runbooks and documentation with these learnings. This process helps us react quickly during incidents and makes onboarding new team members an easy task, too.

Staying Aligned Across Time Zones

Something that we as a team have learnt and aligned on is how quickly alignment can decay. And that has forced us to continuously evolve in our ways of working.

One of the challenges we have is that the entire team, including stakeholders, is distributed across multiple time zones. For our team’s specific needs, we decided to rely on asynchronous communication. While we remain agile and work in two-week iterations, we don’t follow all the standard agile ceremonies in their traditional format.

For example, instead of doing classic daily stand-ups, we use a dedicated Slack channel where every team member posts an update. When there’s a need to discuss something in more detail, we use a dedicated block of time. That can be used for any discussion or information sharing. This works well for us since there is more than a 7 hours difference between some of the team members.

Also, apart from providing updates, the Slack channel is used as a form of a brag-doc, since it contains all information on what every team member worked on during a specific quarter. It makes life much easier during the performance review season.

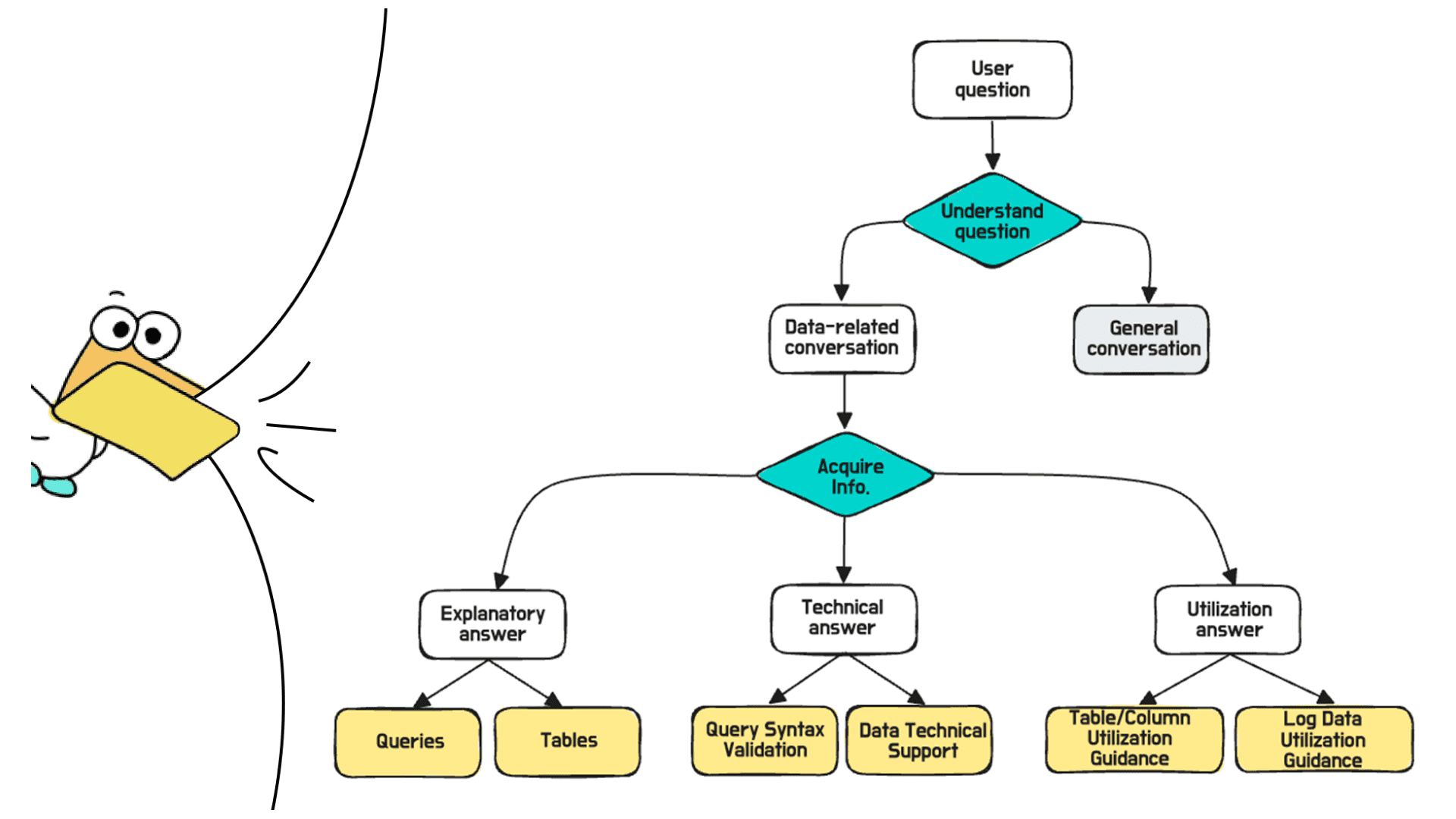

Additionally, the team has a concept of “Hero of the Week” (HoW). Every week, each team member (including Arjit and me) takes responsibility for being a Point of Contact for the whole team with stakeholders. Anyone can reach the current HoW via the team’s public Slack channel to make a request, report a bug or ask a question. The team also uses it as a quite valuable way of additionally documenting decisions being made. Keeping all discussions public on Slack allows us to use the internal GenAI Documentation Bot solution that Delivery Hero uses to help people find related documentation and information.

Conclusion

Reflecting on our journey, it’s amazing to see how much we’ve grown as an engineering team. From tackling technical challenges to refining our processes, every step has been a learning experience. We’ve become more efficient, more reliable and more cohesive as a team.

We’re committed to continuous learning and innovation and we can’t wait to see where this journey takes us next. Thank you for joining us!

If you like what you’ve read and you’re someone who wants to work on open, interesting projects in a caring environment, check out our full list of open roles here – from Backend to Frontend and everything in between. We’d love to have you on board for an amazing journey ahead.