Are you interested in designing a more inclusive app? This article shares accessibility insights from working with people who are blind.

How does someone who’s blind “see” a screen?

Don’t worry, you’re asking the right questions.

The answer is screen readers. A screen reader reads the elements and text on a screen out loud. For example, iOS has “VoiceOver,” and Android has “TalkBack.”

Let’s check out an example:

Breaking down three accessibility principles

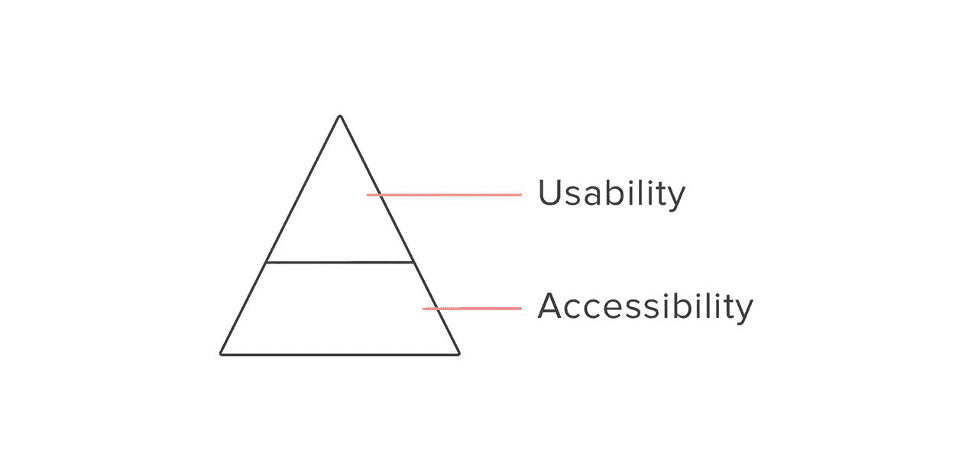

1. Accessibility ≠ Usability

Products can be accessible (information and options can be accessed), but not usable (easy to use).

Notice that in the video demo, the share button was labeled only as a “button.” That’s an inaccessible button because it does not tell the screen-reader user what it’s for.

Accessibility Examples:

- All elements and text can be read by my screen reader.

- The elements are labeled, so I understand what a button is for.

- The elements can be interacted with, so I can click on a button.
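To make the labeling point concrete, here is a minimal sketch of a check you could run over a screen's elements before handing a build to testers. The `UiElement` shape and the `findUnlabeledElements` helper are hypothetical and only for illustration. They are not part of any platform API, and real apps would read this information from the platform's own accessibility properties.

```typescript
// Hypothetical, simplified model of an on-screen element (not a real platform API).
interface UiElement {
  role: "button" | "text" | "image";
  label?: string; // what the screen reader would announce before the role
}

// Returns one message per element that has no usable label, i.e. an element
// a screen reader could only announce by its role (for example, just "button").
function findUnlabeledElements(elements: UiElement[]): string[] {
  const problems: string[] = [];
  elements.forEach((el, index) => {
    const label = el.label ? el.label.trim() : "";
    if (label.length === 0) {
      problems.push(`Element ${index} (${el.role}) has no label`);
    }
  });
  return problems;
}

// Made-up sample data: the second element mimics the unlabeled share button.
const demoElements: UiElement[] = [
  { role: "text", label: "Stickers" },
  { role: "button" },
  { role: "button", label: "Share" },
];

const labelProblems = findUnlabeledElements(demoElements);
console.log(labelProblems);
```

A check like this only catches missing labels. Whether a label actually makes sense to the listener still needs a human test with a screen reader.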

Another observation is that the Facebook stickers appeared in the keyboard area, whereas the GIFs appeared above the keyboard area. This inconsistent behavior makes a product harder to use.

Usability Examples:

- Making a product as simple as possible, especially your main call to action.

- Creating clear information architecture and UX writing, so that users know where to find things more efficiently.

- Streamlined flows are very important. If Facebook hadn’t refocused VoiceOver to the first sticker in the list, a less tech-savvy screen-reader user might say, “Okay… I tapped on stickers, but nothing happened.”

Considerations:

- What’s usable for your grandmother is different from what’s usable for a tech-savvy blind teenager or for you. If you want to open up your product to most people out there, it’s best to make your primary call to action as usable as possible for the least tech-savvy person. On top of that, you can then build in ancillary (advanced) features for tech-savvy users.

- Of course, not every situation is going to be clear cut. It’s okay to make usability trade-offs to serve your primary audience. But if you can open additional pathways for other audiences, while not significantly taking away from your primary audience’s experience, why not do it?

2. Scanning: From part to whole

When visiting a website, a sighted person scans the page by seeing the whole screen, then zeroing in on what catches the eye (e.g. a big red sale banner). Someone who is blind, on the other hand, is used to starting from a part of the screen, then gradually discovering the whole screen.

This principle is important when thinking about reducing clutter in a digital product and making sure your calls to action are within easy reach, especially in a task flow.

An example is shown in the mobile interface below, where a user is in the middle of a task flow: inviting friends to use a product.

Notice that there are two “Invite” buttons: a small one in the top-right corner, to the right of the “Invite Friends” heading, and a big one fixed at the bottom of the screen.

- The small (tertiary) button is easily in reach for someone who is blind, without having to swipe through their entire contact list to find the big button.

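A rough way to see the difference is to count swipes. The sketch below models the invite screen as a flat list of focus stops and counts how many swipes it takes to reach an “Invite” button. Everything here is an assumption for illustration: the `buildInviteScreen` and `swipesToReach` helpers are hypothetical, the 50 contacts are made up, each contact is treated as a single focus stop, and the small button is assumed to come right after the heading in reading order.

```typescript
// Builds a hypothetical focus order for the invite screen, top to bottom.
function buildInviteScreen(contactCount: number, includeTopButton: boolean): string[] {
  const order: string[] = ["Invite Friends, heading"];
  if (includeTopButton) {
    order.push("Invite, button"); // small button next to the heading
  }
  for (let i = 1; i <= contactCount; i++) {
    order.push(`Contact ${i}`);
  }
  order.push("Invite, button"); // big button fixed at the bottom
  return order;
}

// Number of swipes needed to move focus from the first element to the
// first element announced as `target`. Returns -1 if it is not on screen.
function swipesToReach(focusOrder: string[], target: string): number {
  return focusOrder.indexOf(target);
}

const swipesBottomOnly = swipesToReach(buildInviteScreen(50, false), "Invite, button");
const swipesWithTopButton = swipesToReach(buildInviteScreen(50, true), "Invite, button");

console.log(swipesBottomOnly);    // 51
console.log(swipesWithTopButton); // 1
```

Real screen readers offer shortcuts that this model ignores, so treat the numbers as an upper bound for a user who only swipes.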

3. Thinking in a linear sequence: From top to bottom

The way a screen reader reads a screen is very linear, especially on mobile. A screen reader’s focus normally starts at the top of the screen (i.e. the heading), and as you swipe from left to right with your finger, you hear each piece of text and each element in turn. While there are other methods, this is the most basic way the average user would use a screen reader on mobile.

This principle is important when thinking about the voice UI hierarchy — the order of information read out loud — when focusing on an element. It’s good practice to translate visual hierarchy into auditory hierarchy, from most to least important.

In the example of a list item below, a user is searching for a list of nearby store locations, and lands on “AT&T.”

How screen readers would normally read from left to right:

- “10 mi, AT&T, 8657 Villa La Jolla Dr #313, La Jolla, CA, 92037, USA, 20.”

If you customize it to make it more usable, it would read:

- “AT&T, 20 nearby locations, nearest one is 10 miles away at 8657 Villa La Jolla Dr #313, La Jolla, CA 92037. Button. Double-tap to see all 20 locations.”
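As a sketch of how that reordering might be built, the snippet below composes the spoken text from the list item's data instead of relying on the visual left-to-right order. The `StoreListItem` shape and both helper functions are hypothetical. The split into a label and a hint loosely mirrors the label and hint concepts mobile platforms expose (for example, `accessibilityLabel` and `accessibilityHint` on iOS), and the “Button” announcement is left to the screen reader itself.

```typescript
// Hypothetical data behind one row of the store list.
interface StoreListItem {
  name: string;
  locationCount: number;
  nearestDistanceMiles: number;
  nearestAddress: string;
}

// Most important information first: name, then count, then distance and address.
function buildSpokenLabel(item: StoreListItem): string {
  return (
    `${item.name}, ${item.locationCount} nearby locations, ` +
    `nearest one is ${item.nearestDistanceMiles} miles away at ${item.nearestAddress}.`
  );
}

// Tells the user what activating the row will do.
function buildSpokenHint(item: StoreListItem): string {
  return `Double-tap to see all ${item.locationCount} locations.`;
}

const store: StoreListItem = {
  name: "AT&T",
  locationCount: 20,
  nearestDistanceMiles: 10,
  nearestAddress: "8657 Villa La Jolla Dr #313, La Jolla, CA 92037",
};

const storeLabel = buildSpokenLabel(store);
const storeHint = buildSpokenHint(store);

console.log(storeLabel);
console.log(storeHint);
```

Keeping the label and the hint separate lets users who have turned hints off still hear the essential information.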

Imagine if every list item started with the address. Scanning a list of locations to find the location name you want would be painfully slow.

What does an accessibility-first design look like?

Now that you understand more about what a mobile app looks like to someone who is blind, below is an example of how a lo-fi wireframe is optimized for screen readers before being translated to hi-fi mockups.

What’s the secret? Keep it simple, because that’s what makes it usable.

What can you do next?

Test your mobile apps by closing your eyes and listening to the screen reader. Does the information architecture make sense to you? Can your friends successfully complete your main call to action?

To test on iPhone: Ask Siri to “Turn on/off VoiceOver”

To test on Android: Ask Google Assistant to “Turn on/off TalkBack”

Thanks for reading and caring! Here are some key takeaways:

- Accessibility ≠ Usability. For example: If tapping on a button causes the screen to visually change, someone who is blind will need a way to know that in order to know what to do next.

- Scanning: from part to whole. For example: If a screen reader user is in the middle of a task flow, and they must infinitely scroll to find the call to action button, perhaps we can find ways to make that call to action easier to reach.

- Thinking in a linear sequence: from top to bottom. For example: When it comes to an element that contains different information, the voice UI hierarchy should read from most to least important.

Danielle is a Product Designer at Delivery Hero. Apply to Delivery Hero’s current product role openings at: