How the SRE team in our Global Discovery tribe developed an abstraction layer over Terragrunt to manage infrastructure at scale efficiently. This is an explanatory walkthrough of our internal tooling that enables developers to become self-sufficient.

Managing and scaling the search engine of a multinational company that operates in 70 countries worldwide is a significant challenge. The Delivery Hero Search and Discovery SRE team supports 12 developer teams and, with 10 engineers, manages infrastructure consisting of 70+ GCP projects, 160+ GKE clusters, 90+ database instances, and the underlying networking and IAM access.

This post describes how we fulfill requests from teams that have very different needs, require updates on the schedule of their own business roadmaps, and want as much control over their infrastructure as possible.

Our tech stack

First of all, let’s explain what kind of infrastructure our team manages. We rely heavily on Kubernetes, so we have developed a collection of Terraform modules that the teams can use in their projects. We have modules for the GCP project essentials, the Kubernetes cluster, the cluster’s node pools, service accounts, storage buckets, databases, and a few others. In total, we maintain 30 Terraform modules.

To help us keep our code DRY (Don’t Repeat Yourself), we also use Terragrunt. With Terragrunt, we reuse the same Terraform modules and keep configuration files per development team and per GCP project. Using inheritance, we avoid redefining the same configuration again and again.

Our endeavours to be DRY could end here. For each team, for each project they manage, we have a directory infrastructure that looks like this:

Each module lives under its own directory, so it has its own state, allowing changes to be as independent as possible, reducing the blast radius of changes. This is a design choice we made as SRE based on the number of resources that we have to manage. Our goal is to preserve the same directory structure across all the infrastructure-as-code we have. We need to ensure that the infrastructure is uniformly configured everywhere, enforce our security configurations, and run our SRE workloads in all clusters. This means that the above modules need parameterization both from us and the developers, which creates a great challenge. We need to be able to massively update the infrastructure across teams, while at the same time, we allow them to control what belongs to them. In the next section, we analyse these challenges and our possible solutions.

Devs as clients

Each different development team comes with its own needs, different kinds of applications, and different technology stacks, and they also want changes constantly. So imagine we have the following three cases:

These are just examples since lots of things can be parameterized and change from team to team and from project to project. The first approach would be to create a template for each Terraform module that we manage. The developers could grab that template, place it under their repository, and start populating the input variables, so they have the infrastructure they want. How would you approach the creation of a new project? You would copy and paste a template and then populate the variables. The template could look like this:

This is where the following challenges start to appear:

- How do you enforce the same directory structure across all projects? When you update this structure in your templates, how do you also make sure that existing projects will adopt that new change? You need a mechanism to enforce the adoption of newer versions across all new and old projects.

- How do you update variables managed by the SRE across all projects? For example, if you want to migrate all your clusters to a newer version of the Kubernetes control plane, or move from the Docker runtime to containerd, how do you handle the updates?

- If your Terraform modules have new security updates that all projects need to use, how do you enforce the adoption of the newest module version and make sure that nothing is left behind?

Even if we consider that developers are always checking for a new template version, we still have some challenges to face. How can we, as SRE, monitor which teams have adopted our template changes, and if they are using them correctly?

We considered the complexity of these challenges and decided that we needed an extra layer of code management. We had to create a solution that would manage the directory structure, the templates, the modules’ versions, and the validation of the configuration, while still allowing complete parameterization by developers.

The solution: A CLI that does tricks

The requirements were the following:

- We should separate the developers’ variables from the ones managed by the SRE, so the developers can’t affect the base SRE configuration

- Developers should be able to describe their infrastructure requirements easily and in one place

- The SRE should be able to massively update projects and perform concurrent security updates and configuration changes

- The SRE should manage the directory structure, the remote Terraform state, the extra modules that need to be added to all projects, and, of course, their versions
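The first requirement, keeping SRE-managed variables out of the developers’ reach, could be enforced at the point where both sets of values are combined. The following is a minimal sketch under assumptions: `SRE_MANAGED_KEYS`, `merge_inputs`, and the variable names are hypothetical and not taken from the real tool.

```python
# Hypothetical names throughout: SRE_MANAGED_KEYS, merge_inputs and the
# variable names are illustrative, not the real sre-cli internals.
SRE_MANAGED_KEYS = {"module_version", "remote_state_bucket"}


def merge_inputs(sre_defaults: dict, developer_inputs: dict) -> dict:
    """Combine both sources, refusing developer overrides of SRE-managed keys."""
    forbidden = SRE_MANAGED_KEYS.intersection(developer_inputs)
    if forbidden:
        raise ValueError(
            f"SRE-managed variables cannot be overridden: {sorted(forbidden)}"
        )
    # Developer values fill in or override everything that is not SRE-managed.
    return {**sre_defaults, **developer_inputs}
```

Rejecting the override, rather than silently ignoring it, makes the boundary visible to the developer at generation time instead of at review time.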

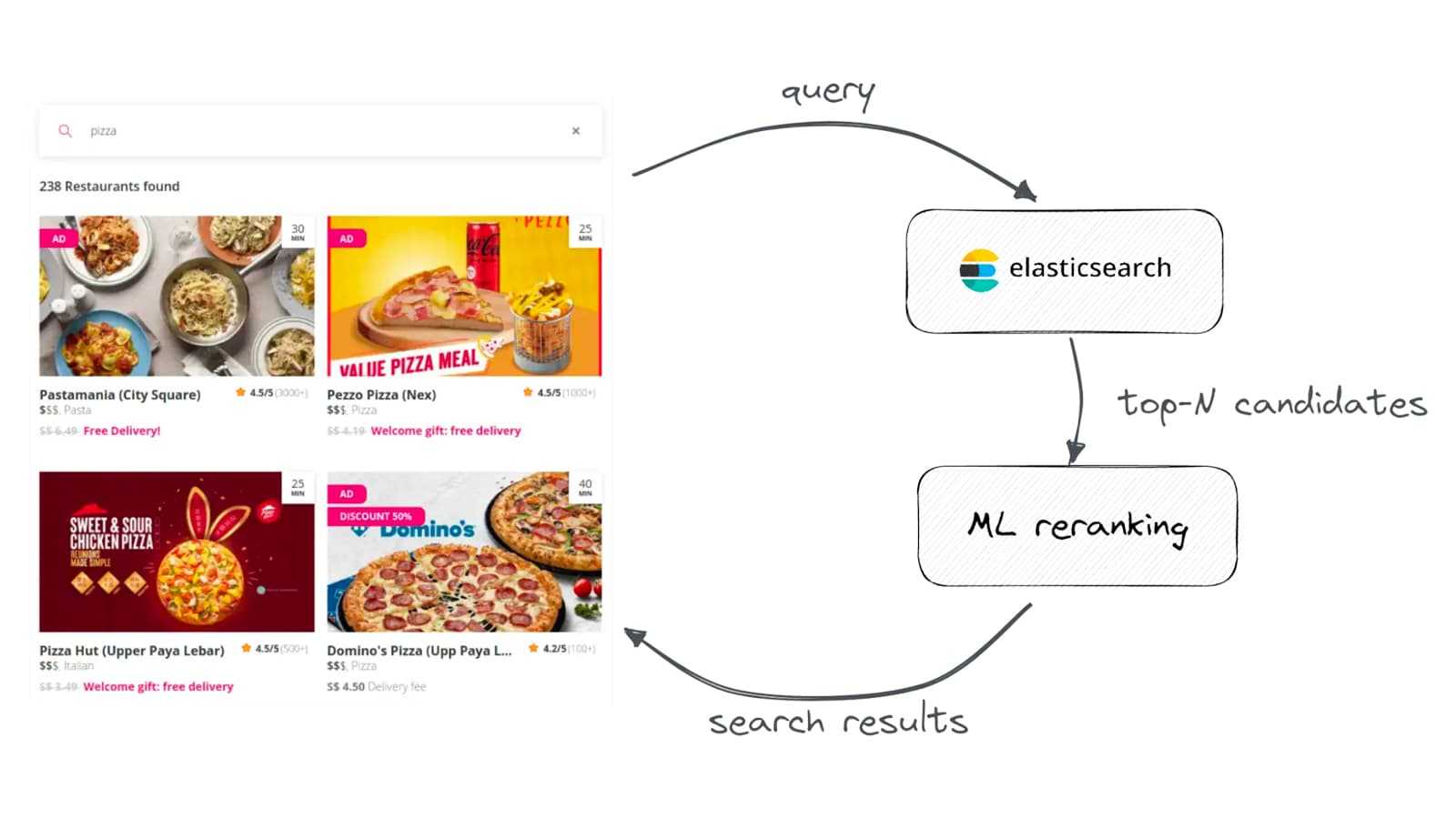

So what if we write a simple CLI that reads the teams’ configuration and produces the desired Terragrunt files, filled with our SRE configurations as well? The idea was simple enough: each project is described by a configuration file, written in YAML in our case, in which the teams define all the clusters and other modules they want for their GCP project. This file is then parsed by our CLI tool and combined with templates maintained by the SRE to generate the final files that describe our infrastructure. This process can be summarised in the following schema.

Let’s explain what each component does.

The Python library

The library exposes a CLI that does a few simple things. The most important command is called “init”, and it accepts a YAML configuration file as input. It combines that file with the internal Jinja templates we maintain for each module and generates an HCL file per module. It also generates some standard HCL files for each project that define common variables, such as the project name and project ID. These files are inherited by each module so we can stay DRY.

Instead of shipping the Python library as a private package, we have dockerized it. When the SRE make changes to the templates or the Python library, CI/CD rebuilds the Docker image and pushes it to our private image registry. The SRE are responsible for pulling the latest version of this image before they regenerate the files for a project, so they have the latest templates in place.

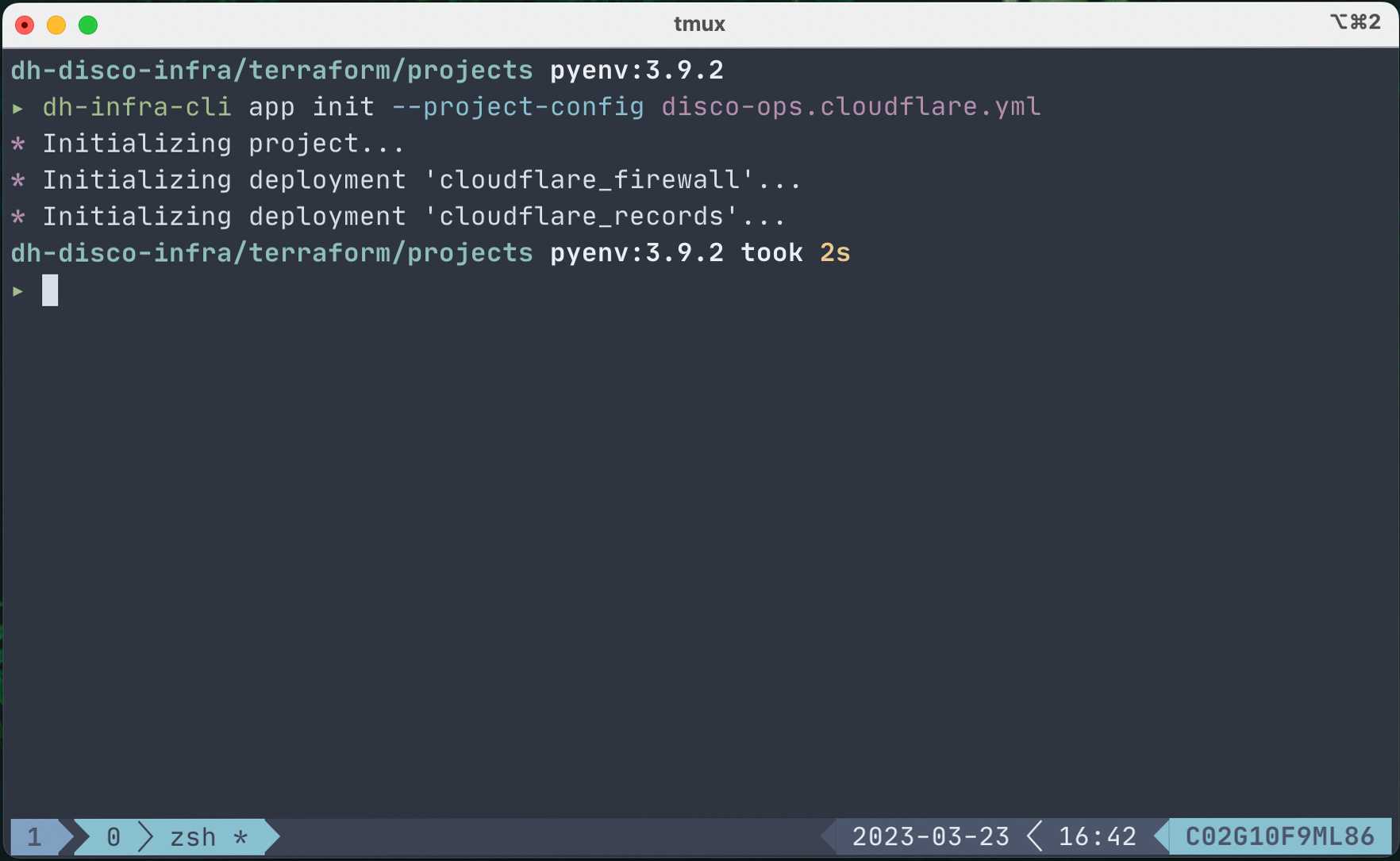

The init command looks like this:

The produced file structure is exactly the same as shown above, but this time, it’s automatically generated instead of manually written. We have now minimized the possibility of having errors in the file structure.

The first thing this command does is read the project YAML file and validate its format. We do this with the Python Schema library and its utilities. This way, we can make sure that all required variables are set and that they have the correct type and format.
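To illustrate the kind of checks involved, here is a standard-library stand-in for that validation step. It does not use the Schema library itself, and the required fields (`name`, `id`) and their types are assumptions made for the example. Only the section and key names `project`, `infrastructures`, `modules`, and `template_name` come from this article.

```python
# Stand-in for the schema-based validation, standard library only.
# The required project fields and their types are assumptions.
REQUIRED_PROJECT_FIELDS = {"name": str, "id": str}


def validate_config(config: dict) -> list:
    """Return a list of human-readable problems; an empty list means valid."""
    project = config.get("project")
    if not isinstance(project, dict):
        return ["'project' section is missing or not a mapping"]

    errors = []
    for field, expected_type in REQUIRED_PROJECT_FIELDS.items():
        if field not in project:
            errors.append(f"project.{field} is required")
        elif not isinstance(project[field], expected_type):
            errors.append(
                f"project.{field} must be of type {expected_type.__name__}"
            )

    infra = config.get("infrastructures")
    modules = infra.get("modules") if isinstance(infra, dict) else None
    if not isinstance(modules, list):
        errors.append("infrastructures.modules must be a list")
        return errors

    # Every module entry needs a template_name so a template can be chosen.
    for index, module in enumerate(modules):
        name = module.get("template_name") if isinstance(module, dict) else None
        if not isinstance(name, str):
            errors.append(
                f"infrastructures.modules[{index}].template_name must be a string"
            )
    return errors
```

Collecting all problems before reporting, instead of stopping at the first one, lets a developer fix a file in a single pass.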

Then the tool iterates through all items under the “infrastructures” section of the YAML file. For each item in the list, it looks for a Jinja template matching the module’s name. If there is none, it falls back to a default template that takes a Terraform module URL defined in the YAML file and combines it with all the input variables. The Jinja templates are part of the Python library, and we look at them below.
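The lookup-with-fallback logic can be sketched as follows. To keep the example dependency-free, `string.Template` from the standard library stands in for Jinja. The template bodies, the `example/...` module sources, and the `source` and `inputs` keys are hypothetical. The `terraform { source = ... }` and `inputs = { ... }` blocks follow the usual Terragrunt file layout.

```python
from string import Template

# string.Template stands in for Jinja so the sketch needs only the standard
# library. Template bodies and the "source"/"inputs" keys are hypothetical.
DEFAULT_TEMPLATE = Template(
    'terraform {\n  source = "$source"\n}\n\ninputs = {\n$inputs\n}\n'
)

NAMED_TEMPLATES = {
    # A module-specific template fixes the module source on the SRE side.
    "gke-cluster": Template(
        'terraform {\n  source = "example/gke-cluster"\n}\n\ninputs = {\n$inputs\n}\n'
    ),
}


def render_module(module: dict) -> str:
    """Render one entry of the modules list into Terragrunt-style HCL text."""
    inputs = module.get("inputs", {})
    # Only flat string inputs are handled in this sketch.
    input_lines = "\n".join(
        f'  {key} = "{value}"' for key, value in inputs.items()
    )
    # Fall back to the default template when no named template exists.
    template = NAMED_TEMPLATES.get(module["template_name"], DEFAULT_TEMPLATE)
    # The default template takes the module URL from the YAML entry itself;
    # named templates simply ignore the extra "source" argument.
    return template.substitute(
        source=module.get("source", ""), inputs=input_lines
    )
```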

The Templates

Each Jinja template makes sure that modules are correctly initialized, defines defaults for variables, loops over data structures, and translates the YAML file structure to the HCL one. Let’s take a look at one of our core modules, our GKE cluster one.

The templates also contain something very important, the default version of the module to be used. This is a key feature for massive updates. We will explain this later on.

Using templates, we can guarantee that all the HCL files are now valid, minimizing human errors even more. We can still have missing variables or incorrect variable types, but not invalid HCL files.
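The translation from parsed YAML values to HCL syntax that the templates perform can be approximated with a small recursive function. This is an illustrative sketch and not the templates’ actual logic: `to_hcl` is a hypothetical helper, and it does not escape HCL interpolation sequences such as `${...}` inside strings.

```python
def to_hcl(value, indent: int = 0) -> str:
    """Translate a parsed YAML value (dict, list, bool, number, str) to HCL."""
    pad = "  " * indent
    if value is None:
        return "null"
    if isinstance(value, bool):  # checked before int: bool is an int subclass
        return "true" if value else "false"
    if isinstance(value, (int, float)):
        return str(value)
    if isinstance(value, str):
        escaped = value.replace("\\", "\\\\").replace('"', '\\"')
        return f'"{escaped}"'
    if isinstance(value, list):
        return "[" + ", ".join(to_hcl(item, indent) for item in value) + "]"
    if isinstance(value, dict):
        if not value:
            return "{}"
        # Each nested level is indented by two more spaces.
        lines = [
            f"{pad}  {key} = {to_hcl(item, indent + 1)}"
            for key, item in value.items()
        ]
        return "{\n" + "\n".join(lines) + f"\n{pad}}}"
    raise TypeError(f"unsupported value type: {type(value).__name__}")
```

Because every value passes through one serializer, the generated files are syntactically consistent even when the YAML input contains nested structures.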

The configuration YAML files

We document the format that the configuration files should follow. A few dozen modules are available, but for the sake of simplicity, we limit our example to a Kubernetes cluster, a NAT, and a VPC.

In this example, we have three sections. The first section is “project”, where we define all the reusable variables that will be inherited by all modules. Then we have “deployments”, where we list all the applications that we will deploy in a cluster. In practice, it is a list whose entries are matched to a cluster through the “cluster_name” key. This functionality deserves a more detailed explanation, but that is out of scope for this article. Finally, “infrastructures” has a “modules” child, which is a list of all the modules that make up our infrastructure for that project. The “template_name” key chooses the template, and you can further configure the module by adding any input variables.
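As a rough picture of that structure, here is how such a file might look once parsed into Python, together with the “cluster_name” matching. Only the section names (`project`, `deployments`, `infrastructures`, `modules`) and the `template_name` and `cluster_name` keys come from the description above. Every other key and value, and the `deployments_by_cluster` helper, is invented for illustration.

```python
# Parsed form of a hypothetical project file. Keys other than the section
# names, "template_name" and "cluster_name" are invented for illustration.
CONFIG = {
    "project": {"name": "demo", "region": "europe-west1"},
    "deployments": [
        {"name": "search-api", "cluster_name": "main"},
        {"name": "indexer", "cluster_name": "main"},
    ],
    "infrastructures": {
        "modules": [
            {"template_name": "vpc", "name": "main"},
            {"template_name": "nat", "name": "main"},
            {
                "template_name": "gke-cluster",
                "name": "main",
                "machine_type": "e2-standard-4",
            },
        ]
    },
}


def deployments_by_cluster(config: dict) -> dict:
    """Group deployment names under the cluster they target via cluster_name."""
    grouped = {}
    for deployment in config.get("deployments", []):
        grouped.setdefault(deployment["cluster_name"], []).append(
            deployment["name"]
        )
    return grouped
```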

Continuous deployment

When the YAML files are created/edited and the “init” command is run, we commit all these changes and open a pull request. We use Atlantis in order to plan and apply our infrastructure changes. The YAML files are now the single source of truth for a project. We have managed to map a simple, small, readable YAML file to our live infrastructure.

The result

Now that we have presented all the pieces of the puzzle, let’s see how it works in action and what use cases it helps us with.

Onboarding a project

When a development team needs a new project, all they need to do is run “sre-cli infra setup --project-name <NAME>”, and the CLI will generate a file from a template, populating the project name from the parameter. The developers can then edit the file to define variables like regions, clusters, machine types, and everything else that changes from project to project. Then they run the “init” command and voilà! Their HCL files are there. It’s time to commit all files and open a PR. The PR is later reviewed and approved by the SRE. A simple GitHub comment, “atlantis apply”, is posted and the infrastructure is created!

The PR review doesn’t need to:

- Validate the file structure since it’s auto-generated

- Validate the auto-generated file contents

- Check if the latest module versions are used since they are generated by the templates

- Check if the YAML files are valid since they are validated by the sre-cli

The PR reviews are limited to double-checking that names, regions, IDs and other variables are correct. Simple as that!

Changing a project

Now the developers have tested their apps on these clusters and decided that they need bigger machines. What do they do? They just edit the YAML file, change the machine type, and run the “init” command again. The command overwrites the HCL files. They open a PR again, the SRE review it again, and Atlantis plans and applies the changes. Everything is done in less than 30 minutes!

Security updates on all projects

The SRE decided that they need to upgrade the Kubernetes cluster module to a new version that has some crucial security patches. What now?

- We implement our changes under owner/tfmodule-gke-cluster where our Terraform module for clusters is defined.

- We release a new version for that module, let’s say v2.0.0

- We upgrade the Jinja template for that module to use v2.0.0

- We commit the newest Jinja template to our repo and open a PR

- After the merge, we release a new version of our sre-cli Docker image

- We regenerate the files for all our YAMLs using the latest sre-cli. We open a PR for each project. We have automation in place for this, but it’s out of the scope of this article.

- The PRs are approved, and Atlantis applies and automatically merges them
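The regeneration step in this workflow boils down to re-rendering every project with the new template default and opening a PR only where the output changed. Here is a minimal sketch of that idea. All names are hypothetical: `DEFAULT_VERSIONS` plays the role of the version carried by each template, `render` stands in for the “init” command, and the generated content is a placeholder rather than real HCL.

```python
# Hypothetical sketch: DEFAULT_VERSIONS plays the role of the default version
# that each SRE-maintained template carries; render() stands in for "init".
DEFAULT_VERSIONS = {"gke-cluster": "v1.9.0"}


def render(project: dict) -> dict:
    """Map each module of a project to placeholder generated file content."""
    return {
        module: f'module = "{module}"\nversion = "{DEFAULT_VERSIONS[module]}"\n'
        for module in project["modules"]
    }


def projects_needing_pr(projects: dict, committed: dict) -> list:
    """Regenerate every project and list those whose output changed."""
    return sorted(
        name
        for name, project in projects.items()
        if render(project) != committed.get(name)
    )
```

After changing `DEFAULT_VERSIONS["gke-cluster"]` to `"v2.0.0"`, only projects that actually use that module show up in the result, so projects without it get no PR.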

Considering that we also need to test these changes in our staging projects first, the whole update lifecycle lasts no longer than a couple of days. Not bad for a scale that big.

Q&A

- Q: Why don’t you open-source the project?

- A: Because we are heavily relying on our own private modules. These modules include lots of configurations that are specific to our use case. If we take the modules out, then whoever wants to use the sre-cli needs to implement their own ones, which is a big part of the functionality.

- Q: Can each team have different versions of the modules?

- A: Yes. Updates take place independently, but usually, we want all projects to use the latest versions. We have automation in place to update the projects but sometimes changes can break things and we need to hold them until we have the green light from the squads.

- Q: What other functionalities does the CLI include?

- A: We already mentioned the “setup” command, which creates templates, and the “init” command, which creates or overwrites the HCL files from the YAML configuration files. We also have the same commands for the applications that we want to deploy on these clusters. Then we have another command that automates the process of regenerating the HCL files and opens a PR on GitHub, so we can avoid doing the same work repeatedly.
